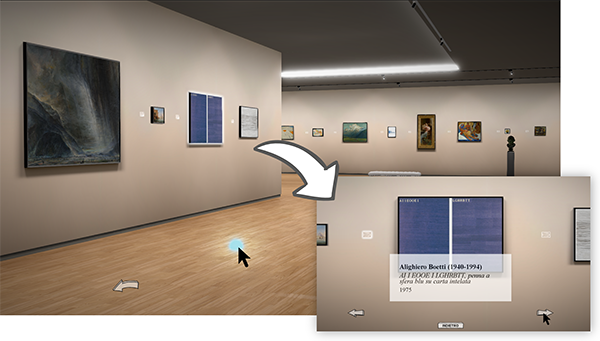

Img. 1 - Muvir

Our latest applications have been a series of testbeds for user interaction, in which we experimented with different solutions in Blend4Web:

The Virtual Pad (HUD), the first solution we adopted, was subsequently discarded for two reasons: it crowds the screen with virtual buttons when many interactions with the 3D environment are foreseen, and it makes actions harder to perform, since the user first has to understand and manage the graphic interface.

The Virtual Pad can, however, be used effectively to control a subset of the app-level functions, such as graphic quality, interface type, pause and back.

In one particular case, 2D virtual buttons were adopted for interaction in “gallery” mode, to shift from 3D navigation to a detailed perusal of the works of art, with movement from object to object in camera target mode rather than in FPS mode (Img. 1).

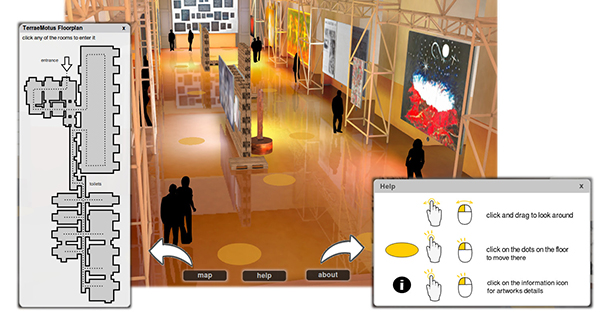

Img. 2 - Terrae Motus Virtual Exhibition: the main view alongside the gallery map and a short help text

On some occasions, global point-and-click navigation is too complex and even redundant.

For example, in a virtual museum where navigation is confined to a limited area, a predefined walkthrough is a better solution, easy both to implement and to use.

For the online exhibition Terrae Motus, at the Royal Palace in Caserta, the navigation is point to point: the user clicks on 3D objects placed inside the scene, such as coloured discs set on the virtual floor near the works of art (Img. 2).

In this deployment as well, Blend4Web APIs helped the development.
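The point-to-point idea can be sketched in plain JavaScript, independent of any engine. This is only an illustration under stated assumptions, not the code used in the exhibition: the waypoint table, the disc names (`disc_01`, `disc_02`) and the helper functions are all hypothetical, and the click detection itself (which the application delegated to Blend4Web) is reduced to receiving the name of the clicked object.

```javascript
// Hypothetical waypoint table: clickable disc name -> camera pose.
// Names and coordinates are illustrative assumptions only.
const WAYPOINTS = new Map([
  ["disc_01", { eye: [0, 1.6, 0], target: [0, 1.6, -3] }],
  ["disc_02", { eye: [4, 1.6, -2], target: [6, 1.6, -2] }],
]);

// Linear interpolation between two 3D points.
function lerp3(a, b, t) {
  return a.map((v, i) => v + (b[i] - v) * t);
}

// Ease-in/ease-out curve so the camera starts and stops gently.
function smoothstep(t) {
  const c = Math.min(1, Math.max(0, t));
  return c * c * (3 - 2 * c);
}

// Camera pose at normalized time t (0..1) while travelling between waypoints.
function poseAt(from, to, t) {
  const k = smoothstep(t);
  return {
    eye: lerp3(from.eye, to.eye, k),
    target: lerp3(from.target, to.target, k),
  };
}

// Resolve a clicked object name to a destination; other objects are ignored.
function destinationFor(clickedName) {
  return WAYPOINTS.get(clickedName) || null;
}
```

In a render loop, `poseAt` would be called every frame with the elapsed fraction of the move, and the resulting `eye` and `target` handed to whatever camera call the engine provides.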

Point-and-click navigation has also been adopted for simple HTML exports. The logic node editor included in Blend4Web made it possible to create clickable areas inside the scene in Blender and to link them to animations and camera movements without writing any code. These areas, set on the floor or on HUDs linked to the camera, allow simple navigation of the scene using the logic blocks alone.

Img. 3 - Muvi. Virtual Museum of Daily Life in XX century

Another line of development led us to a Web app for cardboard VR with point-and-click/point-to-point navigation. The challenge was to find a way of interacting with the 3D scene using the only button available on cardboards while working with a stereoscopic camera. The Blend4Web APIs for HMD, based on WebVR, were used to change the point of view by rotating the head. The point-and-click navigation we had developed up to that moment was unusable, because the Blend4Web APIs were not compatible with the stereo camera (VR object picking was only added to the APIs in December 2016). Furthermore, the cardboard button taps the screen at a fixed position that depends on the type of viewer. Therefore, at the time, we had to resort to a collision test between a ray cast from a point midway between the left and right cameras and the 3D objects that trigger camera movement inside the scene.
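The geometry of such a gaze-ray test can be sketched in plain JavaScript. This is a minimal illustration rather than the original implementation: it does not use any Blend4Web call, it assumes each trigger object is approximated by a bounding sphere, and every name in it (`pickTrigger`, `raySphere`, the trigger list format) is hypothetical.

```javascript
// Small vector helpers for 3-component arrays.
const sub = (a, b) => a.map((v, i) => v - b[i]);
const dot = (a, b) => a.reduce((s, v, i) => s + v * b[i], 0);
const normalize = (a) => {
  const len = Math.sqrt(dot(a, a));
  return a.map((v) => v / len);
};

// Ray origin: the point midway between the left and right eye cameras.
function midpoint(leftEye, rightEye) {
  return leftEye.map((v, i) => (v + rightEye[i]) / 2);
}

// Distance along a unit-length ray to the first hit on a sphere.
// Returns null on a miss, or when the entry point lies behind the origin
// (which includes the case of the origin being inside the sphere).
function raySphere(origin, dir, center, radius) {
  const oc = sub(origin, center);
  const b = dot(oc, dir);
  const c = dot(oc, oc) - radius * radius;
  const disc = b * b - c;
  if (disc < 0) return null;
  const t = -b - Math.sqrt(disc);
  return t >= 0 ? t : null;
}

// Nearest trigger hit by the gaze ray, or null if nothing is hit.
// Assumption: each trigger is { name, center, radius } (a bounding sphere).
function pickTrigger(leftEye, rightEye, viewDir, triggers) {
  const origin = midpoint(leftEye, rightEye);
  const dir = normalize(viewDir);
  let best = null;
  for (const trig of triggers) {
    const t = raySphere(origin, dir, trig.center, trig.radius);
    if (t !== null && (best === null || t < best.distance)) {
      best = { name: trig.name, distance: t };
    }
  }
  return best;
}
```

When the cardboard button is pressed, the result of `pickTrigger` would decide which camera movement, if any, to start. Because the ray follows the head orientation, the fixed screen position of the button tap no longer matters.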

made with Blender and Blend4Web